Let’s take a look at the Hadoop project: what it is and when it might suit your project. Hadoop is in use by an impressive list of companies, including Facebook, LinkedIn, Alibaba, eBay, and Amazon.

In short, Hadoop is well suited to MapReduce-style analysis of huge amounts of data. Its specific use cases include data searching, data analysis, data reporting, large-scale indexing of files (e.g., log files or data from web crawlers), and other processing tasks on what’s colloquially known in the development world as “Big Data.”

In this article, we’ll cover: when to use Hadoop, when NOT to use Hadoop, the Hadoop basics (HDFS, MapReduce, and YARN), Hadoop-related tools, and Hadoop alternatives.

Once we go over all of the topics above, you should be able to confidently answer the question: Does Hadoop have something to offer my business?

When to Use Hadoop

Before discussing how Hadoop works, let’s consider some scenarios in which Hadoop might be the answer to your data processing needs. After that, we’ll cover situations when NOT to use Hadoop.

Keep in mind that the Hadoop infrastructure and Java-based MapReduce job programming require technical expertise for proper setup and maintenance. If these skills are too costly to hire or to build in-house, you might want to consider other data processing options for your Big Data. (Skip to the alternatives to Hadoop!)

1. For Processing Really BIG Data:

If your data is seriously big (we’re talking terabytes or petabytes), Hadoop is for you. For smaller data sets (think gigabytes), there are plenty of other tools available with a much lower cost of implementation and maintenance (e.g., various RDBMSs and NoSQL database systems). Perhaps your data set is not very large at the moment, but this could change as your data grows. In this case, careful planning might be required, especially if you would like all the raw data to always be available for flexible data processing.

2. For Storing a Diverse Set of Data:

Hadoop can store and process any file data, large or small, be it plain text files or binary files like images, or even multiple versions of a particular data format across different time periods. You can change how you process and analyze your Hadoop data at any point in time. This flexible approach allows for innovative developments while still processing massive amounts of data, without slow and/or complex traditional data migrations. The term used for this type of flexible data store is a data lake.

3. For Parallel Data Processing:

The MapReduce model requires that your data processing can be parallelized. MapReduce works very well in situations where variables are processed one by one (e.g., counting or aggregation); however, when you need to process variables jointly (e.g., when there are many correlations between the variables), this model does not work well.
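A rough way to see the difference is to compare an aggregate that decomposes across chunks with one that does not. The sketch below is plain Python, not Hadoop code. The chunk values are made up for illustration, and the median merely stands in for any computation that needs to see the data jointly.

```python
from statistics import median

# Hypothetical data, already split into three chunks as a cluster might hold it.
chunks = [[1, 2, 3], [4, 5, 100], [6, 7]]
all_values = [value for chunk in chunks for value in chunk]

# A sum decomposes: each chunk can be summed independently (the "map" side),
# and the partial sums can then be combined (the "reduce" side).
partial_sums = [sum(chunk) for chunk in chunks]
total_from_parts = sum(partial_sums)

# A median does not decompose this way: combining per-chunk medians
# generally gives a different answer from the median of the full data set.
median_of_medians = median(median(chunk) for chunk in chunks)
true_median = median(all_values)

print(total_from_parts == sum(all_values))  # the sum survives chunking
print(median_of_medians, true_median)       # the median does not
```

Computations of the first kind map naturally onto independent tasks; computations of the second kind need extra coordination or a different algorithm.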

Any graph-based data processing (meaning a complex network of data depending on other data) is not a good fit for Hadoop’s standard methodology. That being said, the related Apache Tez framework does allow for the use of a graph-based approach for processing data using YARN instead of the more linear MapReduce workflow.

When NOT to Use Hadoop

Now let’s go over some instances where it would NOT be appropriate to use Hadoop.

1. For Real-Time Data Analysis:

Hadoop works by the batch (not everything at once!), processing long-running jobs over large data sets. These jobs will take much more time to process than a relational database query on some tables. It’s not uncommon for a Hadoop job to take hours or even days to finish processing, especially in the cases of really large data sets.

The Caveat: A possible solution for this issue is storing your data in HDFS and using the Spark framework. With Spark, processing can be done in near real time by keeping working data in memory. The Spark project has claimed speed-ups of up to 100x over Hadoop MapReduce for in-memory workloads and up to 10x when working from disk, which it attributes to its “multi-stage” approach to running jobs. Actual gains depend on the workload.

2. For a Relational Database System:

Due to slow response times, Hadoop should not be used as a relational database.

The Caveat: A possible solution for this issue is to use the Hive SQL engine, which provides data summaries and supports ad-hoc querying. Hive provides a mechanism to project some structure onto the Hadoop data and then query the data using an SQL-like language called HiveQL.

3. For a General Network File System:

The slow response times also rule out Hadoop as a general networked file system. There are other file system issues as well: HDFS lacks many of the standard POSIX filesystem features that applications expect from a general network file system. According to the Hadoop documentation, “HDFS applications need a write-once-read-many access model for files. A file once created, written, and closed must not be changed except for appends and truncates.” You can append content to the end of a file, but you cannot update it at an “arbitrary” point.
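To make the write-once-read-many rule concrete, here is a toy in-memory model of those semantics. It is only an illustration: the class `WriteOnceFile` and its method names are hypothetical and are not part of any HDFS client API.

```python
class WriteOnceFile:
    """Toy model of write-once-read-many semantics (hypothetical, not the HDFS API)."""

    def __init__(self):
        self._data = bytearray()
        self._closed = False

    def write(self, payload: bytes) -> None:
        # Initial writes are allowed only until the file is closed.
        if self._closed:
            raise PermissionError("file is closed; only append or truncate is allowed")
        self._data += payload

    def close(self) -> None:
        self._closed = True

    def append(self, payload: bytes) -> None:
        # Adding content to the end of the file is permitted.
        self._data += payload

    def truncate(self, new_length: int) -> None:
        # Truncation may only shorten the file.
        if new_length > len(self._data):
            raise ValueError("truncate cannot grow a file")
        del self._data[new_length:]

    def write_at(self, offset: int, payload: bytes) -> None:
        # Updating bytes at an arbitrary offset is exactly what the model forbids.
        raise PermissionError("in-place updates are not supported")

    def read(self) -> bytes:
        return bytes(self._data)


log = WriteOnceFile()
log.write(b"first record\n")
log.close()
log.append(b"second record\n")  # allowed: append to the end

try:
    log.write_at(0, b"X")        # rejected: update at an arbitrary point
    update_rejected = False
except PermissionError:
    update_rejected = True
```

An application that expects to seek into a file and overwrite a few bytes, as many do on a POSIX file system, would hit the last case.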

4. For Non-Parallel Data Processing:

MapReduce is not always the best algorithm for your data processing needs. Each MapReduce operation should be independent from all the others. If the operation requires knowing a lot of information from previously processed jobs (shared state), the MapReduce programming model might not be the best option.

Hadoop and its MapReduce programming model are best used for processing data in parallel.

The Caveat: These state dependency problems can sometimes be partially addressed by running multiple MapReduce jobs, with the output of one being the input for the next. This is something the Apache Tez framework does using a graph-based approach for Hadoop data processing. Another option to consider is using HBase to store any shared state in its large table system. These solutions, however, do add complexity to the Hadoop workflow.
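The idea of chaining jobs can be sketched in a few lines of plain Python. This is a single-process simplification, not Hadoop or Tez code: `run_job`, the mapper and reducer functions, and the log records are all hypothetical names and data chosen for illustration.

```python
from collections import defaultdict


def run_job(records, mapper, reducer):
    """Run one simplified MapReduce pass in a single process."""
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    # Sort by key so the output order is deterministic.
    return [reducer(key, values) for key, values in sorted(groups.items())]


# Job 1: count page views per user from (user, page) log records.
def views_mapper(record):
    user, _page = record
    yield user, 1


def views_reducer(user, ones):
    return user, sum(ones)


# Job 2: group users by view count, taking job 1's output as its input.
def bucket_mapper(record):
    user, count = record
    yield count, user


def bucket_reducer(count, users):
    return count, sorted(users)


log_records = [("ann", "/home"), ("bob", "/home"), ("ann", "/docs"), ("cy", "/faq")]
views_per_user = run_job(log_records, views_mapper, views_reducer)
users_by_views = run_job(views_per_user, bucket_mapper, bucket_reducer)
print(users_by_views)
```

The second job cannot start until the first has finished, which is where the added latency and workflow complexity come from.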

What is Hadoop? — 3 Core Components

Hadoop consists of three core components: a distributed file system, a parallel programming framework, and a resource/job management system. Linux and Windows are the supported operating systems for Hadoop, but BSD, Mac OS X, and OpenSolaris are known to work as well.

1. Hadoop Distributed File System (HDFS)

Hadoop includes HDFS, an open-source, Java-based clustered file system that allows you to do cost-efficient, reliable, and scalable distributed computing. The HDFS architecture is highly fault-tolerant and designed to be deployed on low-cost hardware.

Unlike relational databases, the Hadoop cluster allows you to store any file data and then later determine how you wish to use it without having to first reformat said data. Multiple copies of the data are replicated automatically across the cluster. The amount of replication can be configured per file and can be changed at any point.
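Replication has a direct cost in raw disk space, which is worth estimating up front. The arithmetic below is a back-of-the-envelope sketch: it assumes the commonly used default replication factor of 3, uses a hypothetical helper `raw_storage_needed` and made-up data sizes, and ignores block-size rounding and other overhead.

```python
TB = 10**12  # one terabyte in bytes (decimal)


def raw_storage_needed(logical_bytes: int, replication_factor: int = 3) -> int:
    """Raw cluster bytes consumed by data stored with the given replication factor."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return logical_bytes * replication_factor


# Because replication is set per file, different data can use different factors.
hot_data = raw_storage_needed(10 * TB)                         # default factor of 3
archive = raw_storage_needed(40 * TB, replication_factor=2)    # less critical data
total_raw = hot_data + archive

print(total_raw / TB, "TB of raw disk for 50 TB of data")
```

Lowering the factor for less critical files saves space at the price of less redundancy if nodes fail.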

2. Hadoop MapReduce

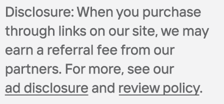

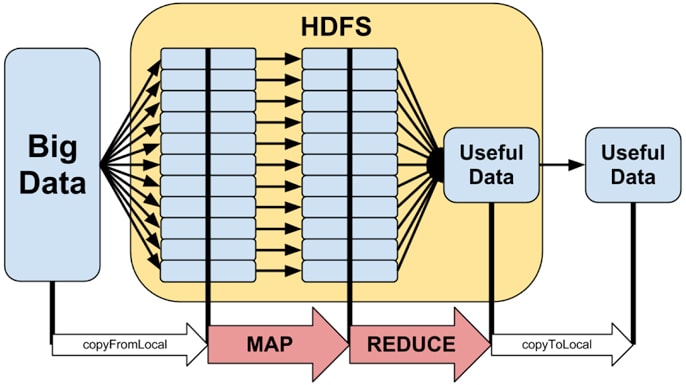

Hadoop is focused on the storage and distributed processing of large data sets across clusters of computers using a MapReduce programming model: Hadoop MapReduce.

With MapReduce, the input file set is broken up into smaller pieces, which are processed independently of each other (the “map” part). The results of these independent processes are then collected and processed as groups (the “reduce” part) until the task is done. If an individual file is so large that it will affect seek time performance, it can be broken into several “Hadoop splits.”

The Hadoop ecosystem uses a MapReduce programming model to store and process large data sets.

Here is a sample WordCount MapReduce program written for Hadoop.
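The model itself can also be sketched without Hadoop. The following is a minimal single-process Python illustration of the map, shuffle, and reduce phases for a word count. It is not Hadoop API code, and the function names and input lines are assumptions made for this example.

```python
from collections import defaultdict


def map_phase(line):
    # Emit a (word, 1) pair for every word in one line of input.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    # Group emitted values by key, as the framework does between map and reduce.
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(word, counts):
    # Combine all values for one key into a single result.
    return word, sum(counts)


def word_count(lines):
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(word, counts) for word, counts in shuffle(pairs).items())


counts = word_count(["the quick brown fox", "the lazy dog", "The end"])
print(counts["the"])
```

In a real cluster, each call to `map_phase` could run on a different node against a different split of the input, and the shuffle step would move data across the network so that all values for one key reach the same reducer.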

3. Hadoop YARN

The Hadoop YARN framework handles job scheduling and cluster resource management, meaning users can submit and kill applications through the Hadoop REST API. There are also web UIs for monitoring your Hadoop cluster.

In Hadoop, the combination of all of the Java JAR files and classes needed to run a MapReduce program is called a job. Under YARN, jobs are submitted to the ResourceManager (which took over the cluster-wide role the JobTracker played in earlier Hadoop versions), either from the command line or by HTTP posting them to the REST API. These jobs contain the “tasks” that execute the individual map and reduce steps. There are also ways to incorporate non-Java code when writing these tasks. If for any reason a Hadoop cluster node goes down, the affected tasks are automatically rescheduled on other cluster nodes.
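As a hedged sketch of what talking to the REST API can look like, the Python below builds (but does not send) two requests against the ResourceManager's `/ws/v1/cluster/apps` endpoints. The host name, port, and application ID are placeholders, the helper function names are hypothetical, and whether kill requests are accepted depends on your cluster's version and security settings, so check the REST documentation for your release.

```python
import json
from urllib.request import Request

# Placeholder address; substitute your own ResourceManager host and port.
RM_BASE_URL = "http://resourcemanager.example.com:8088"


def list_running_apps_request(rm_base_url):
    """Build a GET request that lists applications currently in the RUNNING state."""
    return Request(f"{rm_base_url}/ws/v1/cluster/apps?states=RUNNING", method="GET")


def kill_app_request(rm_base_url, app_id):
    """Build a PUT request asking the ResourceManager to move an app to KILLED."""
    body = json.dumps({"state": "KILLED"}).encode("utf-8")
    return Request(
        f"{rm_base_url}/ws/v1/cluster/apps/{app_id}/state",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


listing = list_running_apps_request(RM_BASE_URL)
kill = kill_app_request(RM_BASE_URL, "application_1700000000000_0001")  # made-up ID

# To actually send a request, pass it to urllib.request.urlopen(...).
print(kill.get_method(), kill.full_url)
```

Building the request separately from sending it makes it easy to inspect or log exactly what would be submitted before touching a live cluster.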

Hadoop Tools

Below you’ll find a list of Hadoop-related projects hosted by the Apache Software Foundation:

Ambari: A web-based tool for provisioning, managing, and monitoring Apache Hadoop clusters, Ambari includes support for Hadoop HDFS, Hadoop MapReduce, Hive, HCatalog, HBase, ZooKeeper, Oozie, Pig, and Sqoop. Ambari also provides a dashboard for viewing cluster health factors, featuring heatmaps and the ability to view MapReduce, Pig, and Hive applications visually, as well as features to diagnose performance characteristics in a user-friendly manner.

Avro: Avro is a data serialization system.

Cassandra: Cassandra is a scalable multi-master database with no single points of failure.

Chukwa: A data collection system, Chukwa is used to manage large distributed systems.

HBase: A scalable, distributed database, HBase supports structured data storage for large tables.

Hive: Hive is a data warehouse infrastructure that provides data summaries and ad-hoc querying.

Mahout: Mahout is a scalable machine learning and data mining library.

Pig: This is a high-level data flow language and execution framework for parallel computation.

Spark: A fast and general compute engine for Hadoop data, Spark provides a simple and expressive programming model that supports a wide range of applications, including ETL, machine learning, stream processing, and graph computation.

Tez: Tez is a generalized data flow programming framework built on Hadoop YARN that provides a powerful and flexible engine to execute an arbitrary DAG of tasks to process data for both batch and interactive use-cases. Tez is being adopted by Hive, Pig, and other frameworks in the Hadoop ecosystem, and also by other commercial software (e.g., ETL tools), to replace Hadoop MapReduce as the underlying execution engine.

ZooKeeper: This is a high-performance coordination service for distributed applications.

Hadoop Alternatives

For the best alternatives to Hadoop, you might try one of the following:

Apache Storm: Storm is often called the Hadoop of real-time processing, and it is written in the Clojure language.

BigQuery: Google’s fully-managed, low-cost platform for large-scale analytics, BigQuery allows you to work with SQL and not worry about managing the infrastructure or database.

Apache Mesos: Mesos abstracts CPU, memory, storage, and other compute resources away from machines (physical or virtual), enabling fault-tolerant and elastic distributed systems to be built easily and run effectively.

Apache Flink: Flink is a platform for distributed stream and batch data processing that can be used with Hadoop.

Pachyderm: Pachyderm claims to provide the power of MapReduce without the complexity of Hadoop by using Docker containers to implement the cluster.

Hadoop Tutorial in Review

Hadoop is a robust and powerful piece of Big Data technology (including the somewhat bewildering and rapidly evolving tools related to it); however, consider its strengths and weaknesses before deciding whether or not to use it in your datacenter. There may be better, simpler, or cheaper solutions available to satisfy your specific data processing needs.

If you want to learn more about Hadoop, check out its documentation and the Hadoop wiki.
