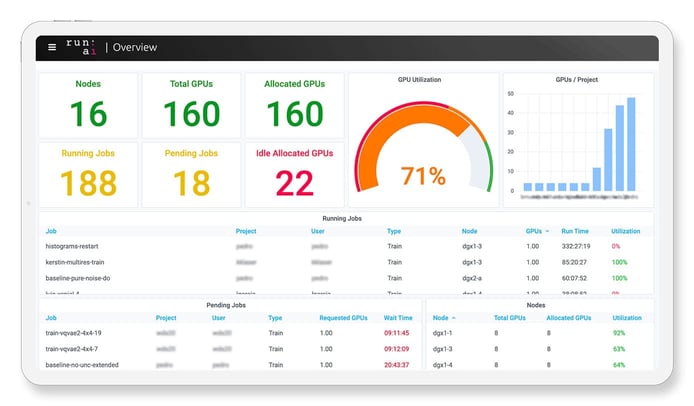

TL;DR: Run:AI is a compute-management platform that centralizes and virtualizes GPU resources, providing the infrastructure users need to support artificial intelligence (AI) and deep learning products. The technology gives data scientists visibility into and control over resources to improve productivity. IT teams can leverage a virtual pool of resources that can be allocated across environments hosted on-premises or in the cloud. With new visibility tools on the horizon, Run:AI aims to equip IT and data science leaders with enhanced capabilities for even better resource management.

The right ingredients, cookware, and utensils have the potential to turn a good cook into a great one, making it easier to whip up delicious meals and ensure they are prepared on time. The same goes for data scientists and IT professionals, although they consume computing power in the heat of a very different kitchen.

“When you give data scientists and tech teams the right infrastructure tools, they can build amazing artificial intelligence (AI) and deep learning products,” said Omri Geller, CEO and Co-Founder of Run:AI. “You don’t have to think of everything — just give them the compute they need, and they will work their magic.”

Run:AI, a compute-management platform, was created to do precisely that.

Omri Geller, CEO and Co-Founder, told us how Run:AI reduces the time and cost of training neural network models.

The technology empowers users to gain visibility into GPU consumption, control training times and costs, optimize deep learning training, and run data experiments at maximum speed.

In addition to optimizing computing resources, Run:AI allows teams to streamline human workflows — a valuable prospect considering the industry’s persistent talent gap.

“There are so few data scientists, and they are hard to hire, so companies have a difficult time scaling their data science teams,” Omri said. “So there’s an important trend here, which is to help data scientists do their jobs faster, better, and easier.”

The same goes for IT teams, which also work in an industry plagued by a skills shortage. With Run:AI, these teams can gain real-time control over and visibility into a virtual pool of computing resources that can be allocated across multiple sites, whether hosted on-premises or in the cloud.

Empowering Smart People to Build Smart Solutions

Omri and Dr. Ronen Dar founded Run:AI more than two years ago while working on graduate degrees under the same supervisor at Israel’s Tel Aviv University. Omri was pursuing his master’s degree while Ronen worked toward a Ph.D.

“One thing that we observed at the time is that when organizations start using more AI — and deep learning, specifically — to develop their solutions, they need a lot more computing power,” Omri said. “While there is a big revolution in the metal hardware world to support those workloads, the software that helps users get the most out of the hardware was missing.”

The pair took matters into their own hands, embarking on a mission to bring that software into the world. The goal was to help people build innovative, AI-powered solutions by fully leveraging the computing power of the industry’s latest systems. Such systems include, for example, NVIDIA DGX servers (such as the DGX-1, based on the Ubuntu Linux Host OS) designed specifically for machine learning and deep learning operations.

“We’re approaching Run:AI in roughly three stages, the first being in the development of virtualization technology, which we have completed,” Omri said. “For the second part, in the first half of 2020, we had private betas where companies provided feedback on the product.”

On March 17, the company announced the completion of stage three, which involved scaling the software out of beta and into general availability. The Run:AI deep learning virtualization platform, which now supports Kubernetes, is officially ready to bring control and visibility to IT teams supporting data science initiatives.

“Deep learning is creating whole new industries and transforming old ones,” Omri said in a press release. “Now it’s time for computing to adapt to deep learning. Run:AI gives both IT and data scientists what they need to get the most out of their GPUs, so they can innovate and iterate their models faster to produce the advanced AI of the future.”

Addressing a Range of Resource Management Challenges

Now that the platform has hit the market, AI-focused organizations can integrate Run:AI’s technology into existing IT and data science workflows.

“The biggest priority for organizations that invest in AI is to bring solutions to market faster,” Omri said. “AI is bringing forth the next wave of competitive advantage for organizations, so time is an even more important business resource than money — and that’s where Run:AI helps.”

Omri said there is a high correlation between more compute power and faster time to market. Run:AI, therefore, is an ideal solution for companies looking to efficiently and effectively usher AI technology into the enterprise.

Run:AI pools compute resources that can be allocated across environments hosted on-prem and in the cloud.

But the benefits extend beyond GPU resources. As mentioned, both the IT and data science fields are notorious for skilled worker shortages. By optimizing the data scientist toolbox, AI companies can empower their existing staff to get more done.

“One of our customers used Run:AI for hyperparameter tuning,” Omri said. “In AI, you try many configurations to determine what will bring you the best results. They ran 6,700 configurations in parallel using our system. To accelerate further, they also tried five configurations on a single GPU. The manager of that group told us that they’d seen results of 25 times faster, which helps bring solutions to market faster.”
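To make the idea of trying many configurations concrete, here is a minimal Python sketch of the bookkeeping involved: it expands a small search space into individual configurations and groups them five to a GPU, as in the customer example. The hyperparameter names, the values, and the helper functions `build_grid` and `pack_onto_gpus` are hypothetical and chosen only for illustration. The sketch does not show how Run:AI actually shares a GPU between jobs.

```python
from itertools import product


def build_grid(search_space):
    """Expand {name: [values]} into a list of configuration dicts."""
    keys = sorted(search_space)
    value_lists = [search_space[key] for key in keys]
    return [dict(zip(keys, values)) for values in product(*value_lists)]


def pack_onto_gpus(configs, per_gpu):
    """Group configurations so that each group shares one GPU."""
    return [configs[i:i + per_gpu] for i in range(0, len(configs), per_gpu)]


# Hypothetical search space: 3 * 2 * 2 = 12 configurations.
search_space = {
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [32, 64],
    "dropout": [0.1, 0.3],
}

grid = build_grid(search_space)
gpu_groups = pack_onto_gpus(grid, per_gpu=5)

for gpu_index, group in enumerate(gpu_groups):
    print(f"GPU {gpu_index}: {len(group)} configurations")
```

With five configurations per GPU, the 12 configurations in this toy search space fit on three GPUs instead of 12.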

Omri told us that AI-focused companies typically spend a lot of money on expensive hardware resources. It follows that Run:AI can help organizations make the most of those purchases. “We help organizations to maintain control over their budgets, which is especially important in the financial environment we’re facing right now.”

A Road Map Dictated Entirely by User Demand

When it comes to internal development, Run:AI’s plans directly reflect user needs.

“We got to a point — and I hope we’ll always be at this point — where we don’t develop anything that did not come from customer demand. The tasks in our product road map include customer names under each of them. It’s great to be in that position.”

The computing platform is applicable in many use cases. The Run:AI team listens carefully to the customer’s resource management challenges before determining what capabilities are most relevant in each situation.

The team starts with GPU optimization, helping users consume GPUs more efficiently. Then they address common challenges. “A lot of customers are concerned with the process of building models — how you share the GPUs between the users so that every user will get GPUs without fighting over the resources,” Omri said.

Instead of assigning fixed GPUs to data scientists, Run:AI creates a pool of GPU resources and can automatically stretch workloads to run over multiple available GPUs. Users may give guaranteed quotas to essential jobs, directing prioritized workloads to available hardware first.
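The pooling and quota behavior described above can be sketched in a few lines of Python. This is an illustrative model under stated assumptions, not Run:AI's scheduler or API: the `Job` class, the `allocate` function, the project names, and the two-pass policy are all hypothetical. The first pass serves each job from its project's guaranteed quota, and the second pass lets jobs stretch onto whatever GPUs remain idle.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    project: str
    gpus_requested: int


def allocate(total_gpus, quotas, jobs):
    """Return {job name: GPUs granted} for one shared GPU pool.

    Pass 1 serves each job up to its project's remaining guaranteed quota.
    Pass 2 hands any leftover GPUs to jobs that still want more.
    """
    free = total_gpus
    quota_left = dict(quotas)
    granted = {job.name: 0 for job in jobs}

    # Pass 1: guaranteed quota comes first.
    for job in jobs:
        share = min(job.gpus_requested, quota_left.get(job.project, 0), free)
        granted[job.name] += share
        quota_left[job.project] = quota_left.get(job.project, 0) - share
        free -= share

    # Pass 2: stretch jobs over GPUs that would otherwise sit idle.
    for job in jobs:
        extra = min(job.gpus_requested - granted[job.name], free)
        granted[job.name] += extra
        free -= extra

    return granted


# Hypothetical pool: 8 GPUs, two projects with a guaranteed quota of 4 each.
jobs = [Job("train-a", "vision", 6), Job("train-b", "nlp", 2)]
result = allocate(8, {"vision": 4, "nlp": 4}, jobs)
print(result)
```

In this example the "nlp" project needs only two of its four guaranteed GPUs, so "train-a" receives its quota of four plus the two idle ones. A real scheduler would also need to reclaim those borrowed GPUs when the other project asks for them, which this sketch leaves out.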

Now in Beta: Enhanced Insights via New Visibility Tools

As for what the future holds, Run:AI is focusing on an all-new visibility tool tailored to administrators.

“We significantly enhanced the way we analyze data on the consumption of resources, we provided insights for the organization, and we also helped them get historical data as well as future estimations on usage,” Omri told us.

The tool, currently in beta, is scheduled for release early this year.

“It’s a visibility tool that will have a lot of impact on the way employees manage resources,” Omri said.
